Abstract

A garment sewing pattern represents the intrinsic rest shape of a garment and is at the core of many applications such as fashion design, virtual try-on, and digital avatars. In this work, we explore the challenging problem of recovering garment sewing patterns from daily photos to augment these applications. To solve the problem, we first synthesize a versatile dataset, named SewFactory, which consists of around 1M images with ground-truth sewing patterns for model training and quantitative evaluation. SewFactory covers a wide range of human poses, body shapes, and sewing patterns, and has realistic appearances thanks to the proposed human texture synthesis network. We then propose a two-level Transformer network called SewFormer, which significantly improves sewing pattern prediction performance. Extensive experiments demonstrate that the proposed framework is effective in recovering sewing patterns and generalizes well to casually taken human photos.

SewFactory

We present a new dataset, SewFactory, for sewing pattern recovery from a single image. The table below compares SewFactory with existing garment datasets. Notably, SewFactory offers high pose variability and a diverse range of garments and human textures, which helps close the domain gap with real-world inputs.

| Dataset | Real/Synthetic | # Garments | Pose Variability | Sewing Pattern | Garment Texture Variability | Human Texture Variability |
|---|---|---|---|---|---|---|
| MGN | Real | 712 | Low | None | Low | Low |
| DeepFashion3D | Real | 563 | Low | None | Low | None |
| 3DPeople | Syn | 80 | High | None | Low | Low |
| CLOTH3D | Syn | 11.3K | High | None | High | Low |
| Wang et al. | Syn | 8K | None | Yes | Low | None |
| Korosteleva and Lee | Syn | 22.5K | None | Yes | Low | None |
| Ours | Syn | 19.1K | High | Yes | High | High |

SewFormer

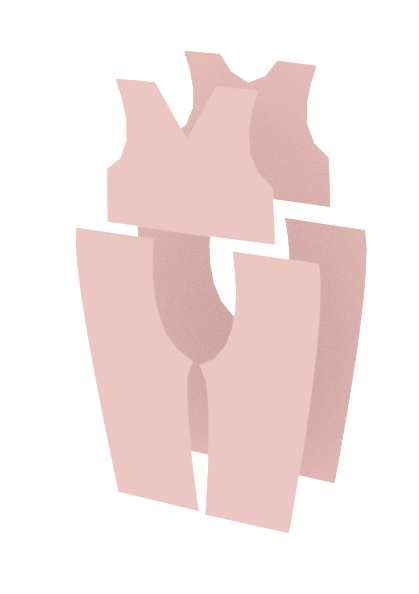

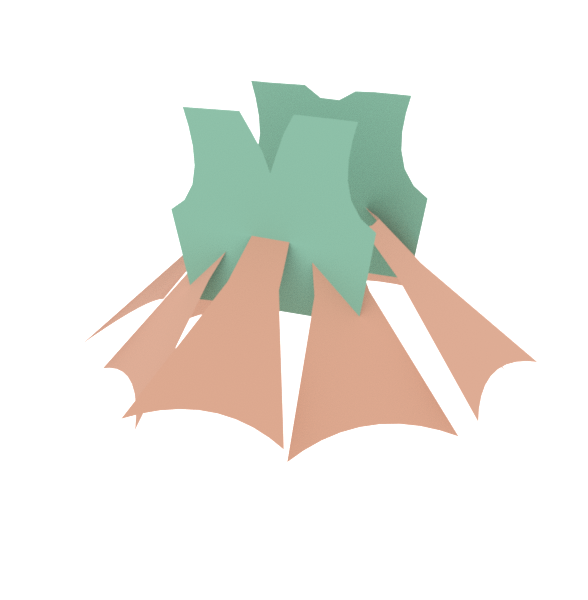

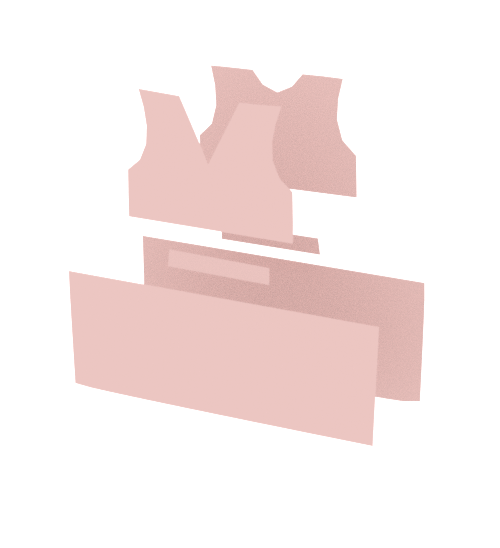

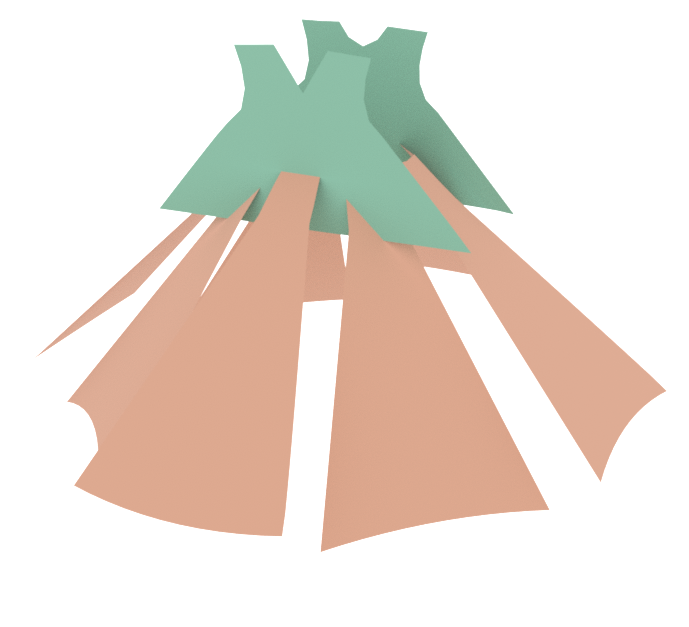

As the figure shows, SewFormer consists of three main components: (a) a visual encoder that learns sequential visual representations from the input image, (b) a two-level Transformer decoder that obtains the sewing pattern in a hierarchical manner, and (c) a stitch prediction module that recovers how different panels are stitched together to form a garment.
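To make the hierarchical output concrete, the following is a minimal Python sketch of a data structure that such a pipeline could fill in: a pattern holds panels, each panel holds edges, and stitches pair up edges across panels. This is not the SewFormer implementation. The names `Edge`, `Panel`, `SewingPattern`, and `rectangle` are hypothetical, and the sketch assumes straight edges and closed polygonal panels, which is a simplification of real sewing patterns.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]
EdgeRef = Tuple[int, int]  # (panel index, edge index within that panel)


@dataclass
class Edge:
    # Hypothetical simplification: a straight 2D segment.
    start: Point
    end: Point


@dataclass
class Panel:
    name: str
    edges: List[Edge]

    def is_closed(self, tol: float = 1e-6) -> bool:
        """True if every edge ends where the next one starts (a closed loop)."""
        n = len(self.edges)
        if n < 3:
            return False
        for i, edge in enumerate(self.edges):
            nxt = self.edges[(i + 1) % n]
            if (abs(edge.end[0] - nxt.start[0]) > tol
                    or abs(edge.end[1] - nxt.start[1]) > tol):
                return False
        return True


@dataclass
class SewingPattern:
    panels: List[Panel]
    # Each stitch joins one edge of one panel to one edge of another.
    stitches: List[Tuple[EdgeRef, EdgeRef]] = field(default_factory=list)

    def is_valid(self) -> bool:
        """Check that panels are closed, stitch references exist,
        and no edge takes part in more than one stitch."""
        if not all(p.is_closed() for p in self.panels):
            return False
        used = set()
        for a, b in self.stitches:
            for panel_idx, edge_idx in (a, b):
                if not 0 <= panel_idx < len(self.panels):
                    return False
                if not 0 <= edge_idx < len(self.panels[panel_idx].edges):
                    return False
                if (panel_idx, edge_idx) in used:
                    return False
                used.add((panel_idx, edge_idx))
        return True


def rectangle(name: str, width: float, height: float) -> Panel:
    """Build a rectangular panel; edges are bottom, right, top, left."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height)]
    edges = [Edge(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    return Panel(name, edges)


# A toy tube-like garment: front and back rectangles sewn along both sides.
pattern = SewingPattern(
    panels=[rectangle("front", 40.0, 60.0), rectangle("back", 40.0, 60.0)],
    stitches=[((0, 1), (1, 3)), ((0, 3), (1, 1))],
)
```

In this sketch, the panel and edge levels loosely mirror the two levels of the decoder in (b), while the `stitches` list corresponds to the kind of output the stitch prediction module in (c) recovers. Calling `pattern.is_valid()` checks that the toy pattern is structurally consistent.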

Result Examples

We show some garment reproduction and editing results here, one example per row. The first column is the input RGB image, and the second column is the sewing pattern recovered by our model. The third column is the corresponding normal map rendered from the simulated 3D mesh, and the last column shows edits based on the recovered model.

[Result figure; columns: Input Image, Sewing Pattern, Reconstruction, Editing]

BibTeX

@article{liu2023sewformer,
  author  = {Liu, Lijuan and Xu, Xiangyu and Lin, Zhijie and Liang, Jiabin and Yan, Shuicheng},
  title   = {Towards Garment Sewing Pattern Reconstruction from a Single Image},
  journal = {ACM Transactions on Graphics (SIGGRAPH Asia)},
  year    = {2023}
}